Serverless GPUs: 4 Cloud Providers Compared

June 11, 2025 by @anthonynsimon

So, you've built a cool AI feature. Maybe it's a tool that generates images, transcribes audio, or provides intelligent search for your app. You've tested it on your laptop, and it works. But now you want to deploy it for others to use, and you've hit a wall: your computer isn't a server, and running these models requires a powerful GPU that you don't have lying around.

What are your options? You could rent a dedicated GPU server from a cloud provider, but that's expensive. You'd be paying for it 24/7, even when no one is using your app. It feels like buying a whole bus just to drive one person to the store.

This is where serverless GPUs come in. They offer a simple promise: you get access to powerful hardware when you need it and pay only for the seconds you use. When your app is idle, you pay nothing.

This approach can make things much easier for anyone building without a massive budget. In this guide, I'll walk you through what serverless GPUs are and compare four popular providers: Replicate, Fal.ai, Koyeb, and Runpod.

What exactly is a serverless GPU?

At its core, "serverless" doesn't mean there are no servers. Of course, there are - they just aren't yours to manage.

Instead of renting a full virtual machine with a GPU attached, you package your code (usually in a Docker container) and hand it to the provider. They take care of the rest:

- Finding a GPU: When a request comes in, the platform finds an available GPU.

- Running your code: It starts your container, runs your code to process the request, and returns the result.

- Shutting down: Once no new requests have arrived for a short idle timeout, it shuts the container down.

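The magic is in the pay-per-use billing. If your API gets 100 requests that each take 5 seconds to run, you pay for roughly 500 seconds of compute time, not the entire day (depending on the provider, cold-start time and idle time before shutdown may also be billed). For apps with low or bursty traffic, this can be drastically cheaper than keeping a GPU running around the clock.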
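The billing arithmetic is easy to check yourself. This is a minimal sketch that assumes per-second billing with no cold-start or idle charges. For simplicity it also assumes the same hourly rate for a serverless GPU and an always-on GPU, although in practice the two rates differ. The function names are illustrative and are not any provider's API.

```python
SECONDS_PER_HOUR = 3600


def pay_per_use_cost(requests, seconds_per_request, hourly_rate):
    """Cost when only the seconds spent processing requests are billed."""
    billed_seconds = requests * seconds_per_request
    return billed_seconds * hourly_rate / SECONDS_PER_HOUR


def always_on_cost(hours, hourly_rate):
    """Cost of keeping one GPU running for `hours`, whether busy or idle."""
    return hours * hourly_rate


# Assumption: both options cost ~$0.70/hr (the L4 rate listed for Koyeb in the
# comparison table); real dedicated and serverless rates usually differ.
RATE = 0.70
print(f"pay-per-use: ${pay_per_use_cost(100, 5, RATE):.2f}")  # 500 s billed
print(f"always-on:   ${always_on_cost(24, RATE):.2f}")         # 24 h billed
```

With these assumed numbers, the script prints $0.10 for pay-per-use and $16.80 for a GPU left running all day. The second figure is also what a single always-warm worker costs per day at that rate. At a constant rate, pay-per-use only catches up when the GPU is busy around the clock.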

The main trade-off is something called a "cold start": the delay (anywhere from a few seconds to a minute or more) it takes to start your container and load your model for the first request after a period of inactivity.
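To make the start/run/shut-down cycle and cold starts concrete, here is a toy simulation of a single scale-to-zero worker. It is a sketch under stated assumptions and does not reproduce any provider's actual scheduler. Requests are handled one at a time, and billing is assumed to run from boot until shutdown. `simulate`, `SimResult`, and the timing parameters are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SimResult:
    cold_starts: int
    billed_seconds: float


def simulate(arrivals, run_s, cold_start_s, idle_timeout_s):
    """Toy model of one scale-to-zero GPU worker.

    Assumptions (illustrative, not any provider's exact policy):
    - one worker handles requests one at a time, in arrival order;
    - billing runs from the moment the worker starts booting until it shuts down;
    - the worker shuts down once it has been idle for idle_timeout_s.
    """
    cold_starts = 0
    billed = 0.0
    session_start = None  # when the current worker began booting
    busy_until = None     # when the worker finishes its queued work

    for t in sorted(arrivals):
        if busy_until is None or t > busy_until + idle_timeout_s:
            # Worker is down: bill the previous session, then boot a new one.
            if busy_until is not None:
                billed += busy_until + idle_timeout_s - session_start
            cold_starts += 1
            session_start = t
            start = t + cold_start_s  # request waits for the cold start
        else:
            start = max(t, busy_until)  # warm: run now or after the queue drains
        busy_until = start + run_s

    if busy_until is not None:
        billed += busy_until + idle_timeout_s - session_start
    return SimResult(cold_starts, billed)


# Two requests close together, then one after the worker has shut down.
print(simulate([0, 10, 100], run_s=5, cold_start_s=20, idle_timeout_s=30))
# SimResult(cold_starts=2, billed_seconds=115.0)
```

Under these assumed settings, 15 seconds of actual work is billed as 115 seconds, and two of the three requests trigger a cold start. A shorter idle timeout cuts idle billing but turns more requests into cold starts. A longer one does the opposite.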

Next, let's look at some serverless GPU providers.

Runpod (sponsor)

Runpod is known in the AI community for competitive pricing. They achieve this by sourcing GPUs both from data centers and from their "Community Cloud," where individuals can rent out their personal high-end consumer GPUs.

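- Key Feature: Price. Runpod is often one of the most affordable options. Their serverless pricing distinguishes between "Flex" workers, which scale up with demand and back down to zero when idle, and "Active" workers, which stay on continuously at a discounted rate. This gives you more ways to balance cost against cold starts.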

- Who it's for: Developers who are cost-sensitive and comfortable with a more hands-on, infrastructure-focused platform. If your main goal is to maximize GPU performance for your budget, Runpod is a strong contender.

Fal.ai

Fal.ai's stated mission is to improve developer experience and speed. They have focused on reducing cold-start times, claiming they can get applications running in under 5 seconds. Their platform is designed to help you go from code to a live, scalable endpoint quickly.

- Key Feature: Fast cold starts and a streamlined developer experience. They also offer real-time, multi-user capabilities out of the box.

- Who it's for: Developers building interactive applications where response time is critical. If a long cold start will negatively impact your user experience, Fal.ai is worth considering.

Koyeb

Koyeb is a general-purpose serverless platform that supports web apps, databases, and workers. The addition of GPUs to their fleet allows you to host your entire application - from the front-end website to the back-end API and the GPU-powered inference endpoint - all on the same platform.

- Key Feature: Unified platform. You don't need to stitch together a web host and a separate GPU provider. Koyeb handles everything with a simple Git-based deployment workflow. They also offer a wide range of GPU configurations, including multi-GPU setups.

- Who it's for: Teams that want to simplify their infrastructure. If you're already running other services on Koyeb, adding a GPU endpoint is straightforward.

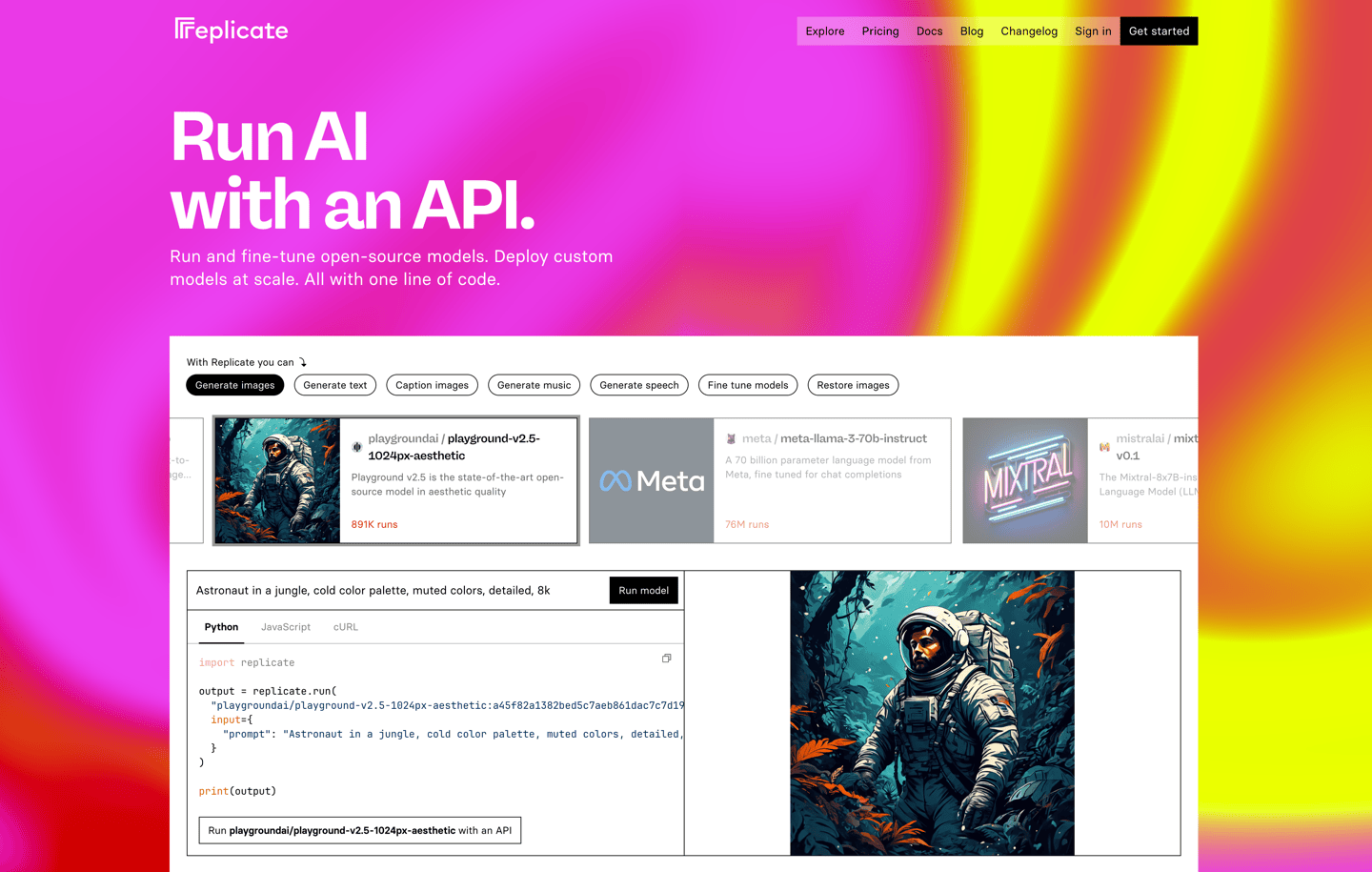

Replicate

Replicate started as a library of pre-configured AI models that anyone could run with a simple API call, often without needing a Dockerfile. They've since expanded to let you run your own custom models, but their strength remains ease of use.

- Key Feature: A large library of public models and a simple API. If you need to run a popular model like Llama 3 or Stable Diffusion, chances are Replicate has it ready to go.

- Who it's for: Developers who want to move fast and not get bogged down in configuration. It's well-suited for quickly adding AI features to an existing app or for those new to Docker and GPUs.

Side-by-side comparison

Here's a quick look at how the providers stack up on a few common GPUs. Prices are approximate and shown per hour for easier comparison. Cold-start times aren't included because they vary too much with your model size and container to compare fairly.

| Provider | L4 (24GB) | A100 (80GB) | H100 (80GB) | Ideal For |
|---|---|---|---|---|
| Fal.ai | Not listed | ~$1.00/hr (40GB) | ~$1.89/hr | Fast cold starts & DX |
| Koyeb | ~$0.70/hr | ~$2.00/hr | ~$3.30/hr | All-in-one platform |
| Runpod | ~$0.48/hr | ~$2.17/hr | ~$3.35/hr | Wide range of affordable GPUs |
| Replicate | Not listed | ~$5.04/hr | ~$5.49/hr | Ease of use & model library |
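Hourly rates are easier to reason about once they're tied to a workload. This sketch converts the approximate H100 prices from the table above into a cost per 1,000 requests. It assumes, hypothetically, that every request takes 5 seconds on every provider and that only processing time is billed. It ignores cold-start and idle billing and any speed differences between providers' setups, so treat the output as a starting point rather than a verdict.

```python
SECONDS_PER_HOUR = 3600

# Approximate H100 (80GB) hourly rates copied from the comparison table.
H100_HOURLY = {
    "Fal.ai": 1.89,
    "Koyeb": 3.30,
    "Runpod": 3.35,
    "Replicate": 5.49,
}


def cost_per_1k_requests(hourly_rate, seconds_per_request):
    """Cost of 1,000 requests, counting only processing time."""
    return 1000 * seconds_per_request * hourly_rate / SECONDS_PER_HOUR


# Assumption: each request takes 5 s on an H100 with every provider.
for provider, rate in sorted(H100_HOURLY.items(), key=lambda kv: kv[1]):
    print(f"{provider:<10} ${cost_per_1k_requests(rate, 5):.2f} per 1,000 requests")
```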

Things to watch out for

Serverless GPUs are powerful, but there are a few things to keep in mind:

- Cold Starts: I mentioned this earlier, but it's the biggest trade-off. If your application needs an instant response every single time, you might need to configure a minimum number of workers to always be "warm" (and running), which adds to the cost.

- Containerization: Most of these platforms require your code to be packaged in a Docker container. If you've never used Docker before, there's a small learning curve. (Replicate is a partial exception here, as you can run many public models without your own container.)

- Data Locality: Your model weights and any data you need have to be loaded into the container when it starts. If you're pulling a 50GB model from a slow storage bucket, your cold start will be painfully long. Most providers have ways to cache models to speed this up.

Which one should you choose?

There's no single "best" provider - it depends entirely on what you're building.

- If price is your primary concern, start with Runpod.

- If response time is critical, check out Fal.ai.

- If you want to host your whole stack in one place, look at Koyeb.

- If you want the easiest path to a live model, go with Replicate.

The nice thing is that they are all pay-as-you-go, so the cost of trying them out is low (but be sure to set up a budget cap or alert).

For a more detailed price comparison across more providers, I also maintain this list of GPU prices across cloud providers.